What feature should you work on next?

When the product owner provides a well-groomed backlog, you may think selecting the next feature to work on is obvious, but often it isn’t. A development team should consider many factors before picking an item from the product backlog to work on.

Here are some examples of how features may be selected for development:

- Product Owner in Charge. In some contexts, the development team is treated as an assembly line for delivering features and has little or no input into which features it will work on. A drawback of this context is that the development team cannot propose an alternative sequence of features that could reduce development costs and increase throughput and quality. Additionally, giving the development team input into these decisions can increase its commitment to completing the features and strengthen its “buy-in” to the product and process, which tends to improve morale and quality.

- PMO in Charge. In some contexts, the development team supports several products and may work on features for a different product each iteration. Where the development team has some input into feature selection, it is often helpful to establish a Product Management Office (PMO) that works with the Product Owners to reach consensus on which features, and which Product Owner’s product, will get development time during the next cycle. When the delivery team works on feature requests that come directly from customers, the team may find it helpful to establish a customer advisory board (CAB) that receives most of the large requests and advises the development team on which features will be most valuable to the most clients.

- Too Many in Charge. In some contexts, development teams switch from feature to feature as bosses, managers, and clients demand that they stop working on one feature and start another, to satisfy a specific customer or the whims of a forceful product owner or manager. Such a context has many drawbacks and inefficiencies. Developers are often demoralized by the inability to finish what they start, so output falls below expectations and quality is poor. Context switching wastes time: developers must familiarize themselves with the new work, and they lose the design ideas that existed only in their heads for the features they had been working on.

- Waterfall. In non-agile environments, new projects and feature requests may be handed to the development team(s) with the expectation that a delivery date will be provided soon, even though none of the Product Owners knows the delivery team’s current backlog. Equally unfortunate, the delivery teams may stop feature development on other products when the new request comes in because they are more interested in learning what the new feature is, and they will spend time on some design and development for it in order to return an estimated delivery date.

- No One in Charge. In contexts without delivery expectations or engaged product owners, developers may drift into spending a lot of time developing features that are very unlikely to ever be used, or in developing features completely different than what the product owners expected. Additionally, developers may context switch often, working on one feature for a while and then another, leaving them with much started and nothing completed when the product owner eventually asks to see some results.

- Customer in Charge. In some contexts, a particularly demanding or upset client seems to dictate the features a team will deliver. I once worked on a team that gained a new client (I will call them ACME), our largest client ever by far, and they soon demanded software improvements to meet their needs. Over the course of two years, most of the features we delivered were those requested by ACME. We even joked that our development methodology was ADD (ACME-Driven Development), as opposed to Behavior-Driven Development (BDD) or Domain-Driven Design (DDD).

- Development Team in Charge. Finally, in contexts where the team wants to work on the features with the greatest return on investment (ROI), developers often collaborate with product owners to calculate the ROI of each feature: the product owners provide a value for the feature from the perspective of those who will use the software, and the developers provide an estimate of the difficulty of delivering the feature and the risks involved. Low-risk, easy-to-develop, high-value features are usually developed first; high-risk, difficult-to-develop features are delivered later, even if their value is high. In this article, ROI is simplified to value divided by development cost (the textbook formula divides net gain, value minus cost, by cost, which ranks features in the same order), and the features with the highest ROI scores should be worked on earliest.
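The value-divided-by-cost ranking described in the last item can be sketched in a few lines of Python. This is only an illustration: the `Feature` class, the feature names, and the numbers are hypothetical, and the sketch ignores risk, which the text says should also influence the order.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    value: float  # estimated value, in whatever unit the product owners use
    cost: float   # estimated development cost, in a comparable unit


def roi(feature: Feature) -> float:
    """Value divided by development cost, the simplified ROI used in the text."""
    if feature.cost <= 0:
        raise ValueError(f"cost must be positive for {feature.name!r}")
    return feature.value / feature.cost


def rank_by_roi(backlog: list[Feature]) -> list[Feature]:
    """Return the backlog sorted from highest ROI to lowest."""
    return sorted(backlog, key=roi, reverse=True)


# Hypothetical backlog; the names and numbers are illustrative only.
backlog = [
    Feature("export to CSV", value=30, cost=10),
    Feature("single sign-on", value=80, cost=40),
    Feature("dark mode", value=12, cost=3),
]

for f in rank_by_roi(backlog):
    print(f"{f.name}: ROI = {roi(f):.1f}")
```

Note that the feature with the largest value (single sign-on) ranks last here because its cost is also the largest.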

The Optimal Context

The optimal context for obtaining the best ROI from software development is a context in which the development team wields the majority of the power to decide the sequence in which features will be developed. The reason for this is the development team has the best knowledge of the changes needed to implement a feature and can suggest the best sequence of feature delivery to minimize development costs. In a healthy context, the development team will desire input from the product owners to identify the features with the highest value and attempt to deliver those first, and the product team will understand and usually be happy with the decisions made by the development team.

However, there are some contexts in which someone other than the development team can make better decisions about what to develop next. One is during chaos, when bad software needs to be fixed immediately or important clients and customers are threatening to leave if their demands are not met quickly. Another is when the team is composed of junior developers or people new to a product. In this latter context, it may be best to allow the product owner to determine the sequence in which the team will work on features until the team has learned the software architecture, development processes, and product domain.

Return On Investment (ROI) for Feature Selection

Although several of the contexts listed above may use ROI (value/cost) when prioritizing feature development, the last context, Development Team in Charge, is usually the one that produces the most accurate ROI calculation. The reason is that both the value and the cost of an undeveloped feature change over time but, with the exception of time-sensitive features, the cost usually changes more significantly than the value. The cost of developing a feature can be affected by many factors, including:

- The sequence in which features are delivered. In some cases, Feature A may be estimated to cost 100 hours to develop, and Feature B may be estimated to cost 40 hours to develop after Feature A. But if the sequence is reversed, and Feature B is developed first, B still costs 40 hours, but Feature A can re-use some of the code developed by Feature B and Feature A only costs 80 hours to develop.

- The specific individuals assigned to do the work affect the cost, often substantially. A feature assigned to a junior developer available at the time may take 100 hours, whereas a senior developer may be able to deliver the same feature in 20 hours. Waiting for the senior developer to be available at the next iteration may be more efficient.

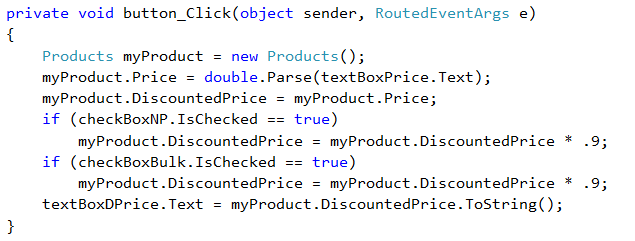

- As developers write code and learn new technologies they sometimes discover a new approach to implement a feature that was previously not considered. The new approach may not only take less time, but also result in higher quality. This is one reason for delaying many technical decisions until you have more knowledge.
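The Feature A / Feature B example in the list above can be checked with a small sketch. The hours are the ones from the example; the `SAVINGS` table and the function names are hypothetical, and the brute-force search is an assumption that only suits a handful of features.

```python
from itertools import permutations

# Stand-alone estimates in hours, taken from the Feature A / Feature B example.
BASE_COST = {"A": 100, "B": 40}

# Hours saved on a feature when another feature has already been delivered:
# (later_feature, earlier_feature) -> hours saved. From the example, A costs
# 80 hours instead of 100 once B exists.
SAVINGS = {("A", "B"): 20}


def sequence_cost(order):
    """Total hours to deliver the features in the given order."""
    done = []
    total = 0
    for feature in order:
        saved = sum(SAVINGS.get((feature, earlier), 0) for earlier in done)
        total += max(BASE_COST[feature] - saved, 0)
        done.append(feature)
    return total


def cheapest_sequence(features):
    """Brute-force search over every ordering; fine for a few features only."""
    return min(permutations(features), key=sequence_cost)


for order in permutations(BASE_COST):
    print(" -> ".join(order), sequence_cost(order), "hours")
print("cheapest:", " -> ".join(cheapest_sequence(BASE_COST)))
```

Delivering A then B totals 140 hours, while B then A totals 120 hours, so the same two features cost less in the second order.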

Hopefully, it is obvious that delivering the features with the highest ROI is most valuable to the company. If your organization is not using ROI as a factor in choosing what to work on next, then your team may be wasting time (as explained in Lean/Agile development philosophy), by not doing what is most valuable first. Allowing the development team to be the primary selector of the sequence in which to develop features may require a change in your team and company culture, and how management hierarchies are organized; but it may be necessary to become more efficient.

Challenges of Calculating ROI

At this point you may accept the premise that choosing features to work on based on their ROI is so sensible that everyone should use this approach, but the reality of software development is much more complicated. First of all, determining the ROI of each feature is very difficult. Developers know that estimating the time to deliver a feature is difficult, but assigning a dollar amount to the value of a delivered feature may be even more difficult. So not only are both the numerator and the denominator of our ROI calculation fuzzy numbers, there is also a lot of room for variance within those two values. What is the ROI for delivering an order entry screen without date pickers for the date fields versus an order entry screen with date pickers for the date fields? What is the ROI of delivering a grid full of data that lacks the ability to filter the data versus a grid full of data that can filter the data? What is the incremental development cost of adding each desired sub-feature to the feature? Can you first deliver a basic set of features and then later deliver the enhancements? All these options and variables make ROI calculations for a single feature very subjective.
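One way to make the fuzziness visible is to carry ranges instead of single numbers. The sketch below is an illustration under stated assumptions: the function names are hypothetical, and the value and cost ranges for the two order entry screens are invented for the example.

```python
def roi_range(value_low, value_high, cost_low, cost_high):
    """Return (worst_case, best_case) ROI for value and cost given as ranges."""
    if not (0 < cost_low <= cost_high):
        raise ValueError("cost range must be positive and ordered")
    if not (0 <= value_low <= value_high):
        raise ValueError("value range must be non-negative and ordered")
    # Worst case: lowest value at highest cost. Best case: the reverse.
    return value_low / cost_high, value_high / cost_low


def ranges_overlap(a, b):
    """True when two (low, high) ranges overlap, so neither clearly wins."""
    return a[0] <= b[1] and b[0] <= a[1]


# Hypothetical estimates for the order entry screen, in arbitrary units.
basic_screen = roi_range(value_low=40, value_high=60, cost_low=10, cost_high=20)
with_date_pickers = roi_range(value_low=50, value_high=80, cost_low=15, cost_high=30)

print("basic screen ROI range:", basic_screen)
print("with date pickers ROI range:", with_date_pickers)
print("too close to call:", ranges_overlap(basic_screen, with_date_pickers))
```

With these made-up numbers the two ROI ranges overlap, which is the situation the paragraph describes: the estimates alone cannot say which option is better.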

Challenges of Using ROI for Feature Selection

- You need to take time to perform ROI calculations and record them with the feature in your tracking system.

- You need to have the people that best know how to value the feature from the customer perspective available to provide an estimated feature value.

- You need to have the people that can best estimate the cost and risks of delivering the feature available to spend time to calculate an estimate.

- The value of an undelivered feature can change over time as market and user needs change or as other options become available that help customers and users resolve their business needs.

- The effort required to deliver a feature can change over time as the skills and knowledge of the developers change, but also as the technologies and frameworks of the software evolve and improve.

- The cost to deliver some features may be reduced when the feature is delivered along with other features. Imagine having three separate requests to add a checkbox for different purposes to the same user interface, and the value of the first checkbox is deemed very high, but the value of the other two checkboxes is not. Each has the same development cost to deliver. However, if all three are delivered together the development costs decrease significantly because all three database changes can be made at once, and all three business object changes can be made at once, and all three UI changes can be made at once. Therefore, given that the cost of implementing checkboxes two and three, when implemented along with checkbox one, is reduced significantly, the ROI of checkboxes two and three would increase and it would make sense to deliver them along with checkbox one.

- Another reason to implement features with lower ROI is to use them as a test case for a new technology. Perhaps you know you want to start encrypting data for millions of transactions, a feature with a lot of risk if it is delivered incorrectly. Therefore, you choose a lower priority feature from the backlog that also needs to encrypt data, but only in a small number of use cases. You can implement the feature with the lower ROI first to work out the difficulties of implementing the new encryption technology before using it on a more important feature.

- There are more reasons for choosing to work on features that don’t have the highest ROI, and some of those reasons include:

  - You have a developer or two that need to be assigned some work, but the developers don’t have the skills to tackle the features with the highest ROI.

  - You have a junior developer or intern, and you want them to comfortably work on some features that will help them best learn your software and development processes; or you want them to work on some features that won’t have a significant impact if the developer makes a mistake.

- ROI includes a time component. Value may decrease over time. One feature may provide a greater ROI initially, but another feature may provide a greater ROI over a longer time period. If a feature is working poorly in Windows 8 but works perfectly on Windows 10, the longer you wait to deliver the feature the less value the feature will provide when finally delivered because more of your customers have migrated to Windows 10.

- ROI value may increase over time. We might estimate the value of a feature to be worth $50,000, but it may be more accurate to say that the feature will increase our revenue by $1,000 per day, thus its value is increasing over time. So, when we calculate the value for ROI and compare the ROI for two features, we need to consider the timeframe of the ROI for each.

- Developers often prefer to work on features that provide the best ROI in the long term, which can include rewriting old, buggy, difficult-to-maintain software using current frameworks and technologies. But if customers are angry and demanding that the bugs get fixed ASAP, it may be best to spend time fighting the current fires, knowing that the very code you are fixing will be thrown out and replaced within a few months. It feels like a waste, but you must survive the short term to exist in the long term. If that phrase doesn’t make sense, consider this analogy: fighting global warming may be the most important challenge we face and where we should focus our resources, but if the largest nations in the world are on the brink of a nuclear war, then focusing resources on resolving that challenge in the short term becomes more important than fighting global warming, because if we don’t deal with the short-term problem, no one may survive to address the long-term problem.
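Two items in the list above, bundling the checkboxes and value that accrues over time, come down to simple arithmetic. The sketch below uses hypothetical function names and hypothetical checkbox numbers; only the $1,000 per day and $50,000 figures come from the text.

```python
def marginal_roi(value, bundle_cost_with, bundle_cost_without):
    """ROI of adding one item to a bundle, using only the extra cost it adds."""
    extra_cost = bundle_cost_with - bundle_cost_without
    if extra_cost <= 0:
        raise ValueError("adding an item must add some cost")
    return value / extra_cost


def recurring_value(value_per_day, horizon_days):
    """Value of a feature that earns a fixed amount per day, over a horizon."""
    return value_per_day * horizon_days


# Checkbox example with hypothetical numbers: each checkbox costs 10 on its
# own, but checkbox 1 and checkbox 2 delivered together cost 12.
standalone_roi = 8 / 10
bundled_roi = marginal_roi(value=8, bundle_cost_with=12, bundle_cost_without=10)
print(f"checkbox 2 alone: {standalone_roi:.1f}, bundled: {bundled_roi:.1f}")

# Revenue example from the text: $1,000 per day versus a flat $50,000 estimate.
for days in (30, 50, 90):
    print(days, "days:", recurring_value(1_000, days))
```

Under these assumptions, the low-value checkbox goes from an ROI below 1 to an ROI of 4 when bundled, and the per-day feature is worth less than the flat $50,000 estimate before day 50 and more after it, which is why the timeframe has to be stated when two ROI figures are compared.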

Should the Development Team Do Activities Other Than New Features?

Some readers may feel there is already a lot to consider in using ROI to drive feature delivery, but using ROI to determine what a development team does next is only part of the equation. Some activities may be more valuable to your development process than developing new features. If you have adopted a Continuous Process Improvement mindset, then, by definition, you believe you should regularly spend time considering how to improve your processes; in this context, your software development processes. What if you could make a change to your software development process that would increase the delivery speed of new features by 50%? I suspect you would want to implement that change. But that change is not a new feature. It is more likely a DevOps change, a significant refactoring of the code, or a process change such as adopting Kanban. Whatever the change is, it is likely to require time from your developers to implement and adopt, which means that instead of developing new features, your developers will spend their time on other activities.

In other words, there are activities other than developing new features that your development team should consider spending time on. Your software product and delivery process will sometimes get a better ROI when the development team improves DevOps, fixes bugs, refactors code, attends training, writes unit tests, or writes developer documentation than when it develops new features. This is not to say that these activities always, or even most of the time, provide a better ROI than delivering new features, but it does mean that the decision makers should not consider only new features when deciding what the development team should do next.

Evaluating the ROI of Possible Development Team Activities

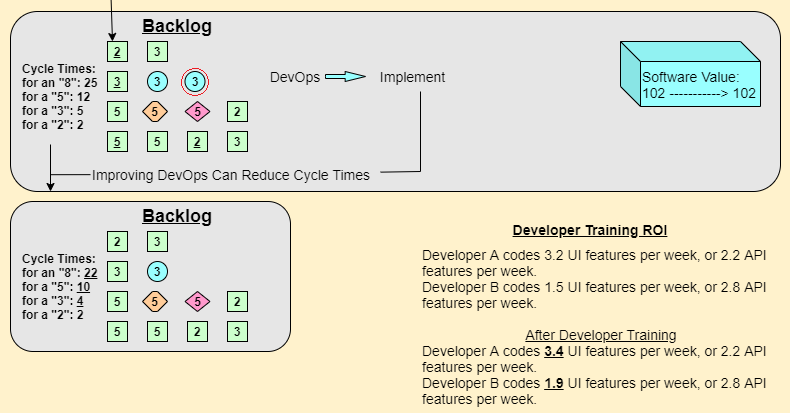

The following diagrams provide examples of a backlog of activities that a development team could perform. The backlog has four types of items: New Feature, Fix Bug, Refactor, and DevOps change. In the upper half of the first diagram, the team chooses to implement a New Feature that has a development cost of 2, and the value perceived by the customer receiving the feature is 12 (100 – 88).

In the lower half of the above diagram, the development team chooses to fix a bug. The bug has a cost of 3 and increases the customer’s perceived value of the software by 2 (102 – 100). For both developers and businesspeople, the examples above are easily understood.

The following examples attempt to convey the value of Refactoring, DevOps Changes, and Training using the same principles of ROI.

In the upper half of the diagram below, the development team chooses to refactor some of their existing code. This code is delivered to production, but the customer perceives no value in the change (102 – 102 = 0). From the perspective of the customer, the features of the software have not changed (although in some cases refactoring may include noticeable performance or security improvements). But what you should notice is that the cost of several items in the Backlog changed after the refactoring was implemented. The most common effect of a successful refactoring is that delivering future features becomes easier. Of course, as with ROI calculations, it is difficult to estimate the impact on cost that a refactoring will have on other features. Additionally, refactoring code to support a newer framework or technology may be beneficial to changes made a year or more in the future, but not particularly valuable to the other features in the immediate backlog.

The lower part of the above diagram begins to show the impact of a change in DevOps. For purposes of this article, a DevOps change refers to a change made to the processes and tools used to build, unit test, validate, and deliver software. A DevOps change could mean that the team took time to automate builds instead of performing manual builds, or to automate deployment to a test environment so the QA team could test the latest build and provide feedback more quickly. Regardless, the DevOps change does not (in this case) affect production, and there is no deployment. The customer does not gain any direct value from the DevOps change. But, like refactoring, the DevOps change has a positive impact on subsequent development, as shown in the diagram below. In this example, the Cycle Time, the time it takes a feature to go from “started” to “delivered”, was reduced for most features developed after the DevOps enhancement. By investing time to make this DevOps improvement, all future development can be delivered faster. In this specific, admittedly fictional, example, the time for a feature with a size estimate of “8” has been reduced from 25 to 22, the time for a feature with a size estimate of “5” from 12 to 10, and the time for a feature with a size estimate of “3” from 5 to 4, while the time for a feature with a size estimate of just “2” remains at 2.
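To see how those per-feature reductions add up, here is a minimal sketch. The before and after cycle times are the fictional ones from the paragraph above; the upcoming backlog, the cost of the DevOps change, and the assumption that cost and cycle time share one unit are all hypothetical.

```python
# Cycle time by size estimate, before and after the DevOps change.
# These numbers come from the article's fictional example; the unit is
# whatever unit the team tracks cycle time in.
CYCLE_TIME_BEFORE = {8: 25, 5: 12, 3: 5, 2: 2}
CYCLE_TIME_AFTER = {8: 22, 5: 10, 3: 4, 2: 2}


def time_saved(backlog_sizes):
    """Total cycle time saved across a backlog of size estimates."""
    return sum(CYCLE_TIME_BEFORE[s] - CYCLE_TIME_AFTER[s] for s in backlog_sizes)


def items_until_payback(change_cost, backlog_sizes):
    """Number of backlog items delivered before savings cover the change cost.

    Returns None when the backlog runs out before the cost is recovered.
    """
    recovered = 0
    for count, size in enumerate(backlog_sizes, start=1):
        recovered += CYCLE_TIME_BEFORE[size] - CYCLE_TIME_AFTER[size]
        if recovered >= change_cost:
            return count
    return None


# Hypothetical upcoming backlog (size estimates) and DevOps change cost,
# with the cost expressed in the same unit as cycle time (an assumption).
upcoming = [8, 5, 3, 2, 5, 8]
print("time saved:", time_saved(upcoming))
print("items until payback:", items_until_payback(change_cost=6, backlog_sizes=upcoming))
```

For this made-up backlog the change saves 11 units in total and pays for a cost of 6 after the third item, which is one way to put a number on an activity the customer never sees directly.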

One additional activity included in the lower part of the above diagram is developer training. The impact of developers taking time for training, such as learning new design techniques, coding techniques, or development product features, may be to increase the speed at which developers can produce features. So, like refactoring and DevOps enhancements, a team may consider developer training to be the activity that it is most valuable for a development team to do next.

Doing Multiple Things At The Same Time

The entire article up to this point assumes that development teams do one thing at a time. In reality, teams with more than three people usually do several things at the same time. Most teams, even many Kanban teams, will pick several features, bugs, and other activities to work on over the course of a few weeks, or during the next iteration. One benefit of doing multiple activities is that some team members are usually working on new features, which is what product owners and customers are primarily interested in. Working on multiple activities at the same time allows some feature progress to continue even as other team members spend months on DevOps or refactoring activities.

Recap

- There is value in understanding who decides what the development team will do next.

- There is value in recognizing that the development environment context strongly influences who makes that decision.

- Return on Investment (ROI) should be a primary factor in determining what is done next.

- Developers usually can provide the best ROI estimates.

- ROI is a subjective measure.

- Activities such as refactoring and DevOps improvements may provide a better ROI than developing new features.